INDEX-LINKED CONTRACTS

indemnification of the insured or reinsured for the losses suffered. Another

way to transfer insurance risk, which is particularly important in its transfer

to the capital markets, is to link the payments to a certain value of an index

as opposed to basing it only on the reimbursement of the actual losses

suffered by a specific entity.

An example of such an index would be the level of losses suffered by the whole insurance industry from a hurricane in a particular region. Another example would be a purely parametric index based on the intensity of a specified catastrophic event, without reference to actual insured losses.

The two main types of insurance-linked securities whose payout depends

on an index value are insurance derivatives and industry loss warranties.

Industry loss warranties (ILWs) and catastrophe derivatives (a subset of

insurance derivatives) were the first insurance-linked securities to appear.

ILWs were first introduced in the 1980s and at the time they were often

referred to as original loss warranties (OLWs) or original market loss

warranties.

The first catastrophe derivative contracts were developed in

1992 by the Chicago Board of Trade (CBOT). Both types of contract have

since evolved; their markets have evolved as well. ILWs in particular are

now playing an important role in the transfer of catastrophe risk from insurance

to capital markets.

The use of an index as a reference offers the transparency and lack of

moral hazard that are so important to investors. The ease of standardisation

is also important. One of the key advantages, not yet fully realised, is the

liquidity and price discovery that come with exchange-traded products such

as catastrophe derivatives.

This chapter provides an overview of ILWs and catastrophe derivatives

and explains the considerations used in their analysis by investors and

insurers. It then describes the standard indexes used in structuring these

securities and gives some specific examples.

The focus is on property insurance

risk transfer; insurance derivatives linked to mortality and longevity

are explained in the chapters dealing with mortality and longevity risk

trading, while weather derivatives are discussed in Chapter 8. Finally, the

present chapter examines the trends in the market for ILWs and catastrophe

derivatives and the expectations for its growth and evolution.

ROLE OF AN INDEX

Index-linked investments are common in the world of capital markets. The indexes used in insurance and reinsurance risk analysis are typically related

to the level of insurance losses; these are not investable indexes and neither

are their components. A derivative contract can still be structured based on

such an index, but the underlying of the derivative contract is not a tradable

asset.

In the transfer of insurance risk, an index is chosen in such a way that

there is a direct relationship between the value of the index and the insurance

losses suffered. The relationship is not exact, however, and the mismatch between the index-based payout and the actual losses of the protected party is known as

basis risk. This risk is not present when a standard indemnity reinsurance mechanism

is used.

While index-linked products are used primarily for the transfer of true

catastrophe risk, there is a growing trend of transferring higher-frequency

(and lower-severity) risk to the capital markets. The indexes used do not

necessarily have to track only catastrophic events.

CATASTROPHE DERIVATIVES DEFINED

In financial markets, a derivative is a contract between two parties the value of which is dependent on the value of another financial instrument known

as an underlying asset (usually referred to simply as an underlying). A

derivative may have more than one underlying. In the broader sense, the

underlying does not have to be an asset or a function of an asset.

Catastrophe derivatives are such contracts, with the underlying being an

index reflecting the severity of catastrophic events or their impact on insurance

losses.

Futures are an example of derivative instruments. Catastrophe futures are

standardised exchange-traded contracts to pay or receive payments at a

specified time, with the value of the payment being a function of the value of an index. Unlike many traditional futures contracts, catastrophe futures never involve

physical delivery of a commodity or other asset.

Options are another example of financial derivatives; they give the holder the right, but not the obligation, to buy (call option)

or sell (put option) an underlying asset at a predetermined price (strike). In

the context of catastrophe derivatives, of particular importance are call

spreads, which are the combination of buying a call at a certain strike price

and selling a call on the same underlying at a higher strike, with the same

expiration date.

The calls can be on catastrophe futures. Using a call spread

limits the amount of potential payout, making the contract somewhat

similar to reinsurance, where each protection layer has its own coverage

limit.

Binary options provide for either a fixed payment at expiration or,

depending on the value of the underlying, no payment at all. In other words,

there are only two possible outcomes. They are also referred to as digital

options.
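The following minimal Python sketch makes the two payoff shapes concrete. It computes the expiration payoff of a call spread and of a binary option on a loss index, and it is illustrative only. The function names are hypothetical. The index units (US$ billions of industry losses), the strikes and the `notional_per_point` multiplier are assumptions for the example and are not terms of any listed contract.

```python
def call_payoff(index_value: float, strike: float) -> float:
    """Payoff of a long call at expiration: max(I - K, 0)."""
    return max(index_value - strike, 0.0)


def call_spread_payoff(index_value: float, lower_strike: float,
                       upper_strike: float, notional_per_point: float = 1.0) -> float:
    """Long call at lower_strike, short call at upper_strike (same underlying and expiry).

    notional_per_point is a hypothetical dollar multiplier per index point;
    real contracts define their own.
    """
    if upper_strike <= lower_strike:
        raise ValueError("upper_strike must exceed lower_strike")
    spread = call_payoff(index_value, lower_strike) - call_payoff(index_value, upper_strike)
    return notional_per_point * spread


def binary_payoff(index_value: float, trigger: float, fixed_payment: float) -> float:
    """All-or-nothing payoff: the fixed amount if the index reaches the trigger, else zero."""
    return fixed_payment if index_value >= trigger else 0.0


if __name__ == "__main__":
    # Index in US$ billions of industry losses (illustrative values only).
    for index_value in (15.0, 25.0, 40.0):
        print(index_value,
              call_spread_payoff(index_value, 20.0, 30.0),
              binary_payoff(index_value, 20.0, 10.0))
```

The call spread's payout is capped at the distance between the strikes. The binary option pays either everything or nothing.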

There are numerous ways that catastrophe derivatives can be structured.

The payout may depend on a hurricane of a specific magnitude making

landfall in a certain area; on the value of total cumulative losses from hurricanes

to the insurance industry over a certain period of time for a specified

geographical region; or on the value of an index tracking the severity of an

earthquake at several locations.

The flexibility in structuring an over-the-counter

(OTC) derivative allows hedgers to minimise their basis risk. At the

same time, there are significant advantages to using standard instruments

that can be traded on an exchange.

Exchange-traded derivatives are more

liquid, allow for quicker and cheaper execution, provide an effective mechanism

for managing credit risk and bring price transparency to the market,

all of which are essential for market growth.

Derivatives versus reinsurance

All insurance and reinsurance contracts may be seen as derivatives, albeit not recognised as such by accounting rules. Technically, they would be call

spreads, with the distance between the two strikes corresponding to the policy limit. From the point of

view of the party being paid for assuming the risk, an excess-of-loss reinsurance

contract can be seen as being equivalent to selling a call with the

strike at the attachment point and buying a call with the strike equal to the

sum of the attachment point and the policy limit. The “underlying” in this

case is the level of insurance losses.
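The equivalence can be checked numerically. This minimal sketch uses a hypothetical layer of 50 excess of 100. It computes the cedant's recovery in two ways: once as an excess-of-loss layer, and once as a long call struck at the attachment point minus a call struck at the attachment point plus the limit. The reinsurer is short the spread, so it pays the same amount.

```python
def xol_layer_recovery(loss: float, attachment: float, limit: float) -> float:
    """Cedant's recovery: losses above the attachment point, capped at the limit."""
    return min(max(loss - attachment, 0.0), limit)


def call_spread_equivalent(loss: float, attachment: float, limit: float) -> float:
    """Same cash flow as a long call struck at the attachment point minus a
    call struck at attachment + limit, with insurance losses as the underlying."""
    def call(strike: float) -> float:
        return max(loss - strike, 0.0)
    return call(attachment) - call(attachment + limit)


if __name__ == "__main__":
    attachment, limit = 100.0, 50.0  # hypothetical layer: 50 excess of 100
    for loss in (80.0, 120.0, 150.0, 400.0):
        recovery = xol_layer_recovery(loss, attachment, limit)
        assert recovery == call_spread_equivalent(loss, attachment, limit)
        # The reinsurer is short the spread, so it pays exactly what the cedant receives.
        print(f"loss {loss:>6.1f}: cedant receives {recovery:.1f}, reinsurer pays {recovery:.1f}")
```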

True derivatives, such as insurance catastrophe derivatives, have a

better-defined and more stable underlying and are accounted for as financial derivative products; insurance accounting is not allowed for them.

This topic will be revisited later in the chapter.

INDUSTRY LOSS WARRANTIES DEFINED

The term “industry loss warranty” (ILW) has been used to describe two types of contract, one of them a derivative and the other a reinsurance

contract. In its most common form, an ILW is a double-trigger reinsurance

contract. Both trigger levels have to be exceeded for the contract to pay. The

first is the standard indemnity trigger of the reinsured suffering an insured

loss at a certain level, that is, the ultimate net loss (UNL) trigger.

The second is that of industry losses or some other index level being exceeded. The

index of industry losses can be, for example, the one determined by the

Property Claim Services (PCS) unit of Insurance Services Office, Inc. (ISO).

An ILW in a pure derivative form is a derivative contract with the payout

dependent only on the industry-based or some other trigger as opposed to

the actual insurance losses of the hedger purchasing the protection. Even

though labelled an ILW, it is really an OTC derivative such as the products

described above.

The choice between the ILW reinsurance and derivative forms of protection

has significant accounting implications for the hedger. It is typically

beneficial for the hedger to choose a contract that can be accounted for as

reinsurance, with all the associated advantages. This is why the vast

majority of ILW transactions are done in the form of reinsurance.

The majority of ILWs have a binary payout, and the full amount is paid

once the index-based trigger has been activated. (We assume that the UNL

trigger condition, if present, has been met.) However, some ILW contracts

have non-binary, linear payouts that depend on the level of the index above

the triggering level. There seems to be general market growth in all of these

categories.
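The two payout styles are sketched below. As in the text, the sketch assumes that the UNL condition has already been met. The linear version is an illustrative assumption only: its payout rises from zero at the trigger to the full limit at a hypothetical `exhaustion` index level. Actual contracts specify their own scale.

```python
def binary_ilw_payout(index_value: float, trigger: float, limit: float) -> float:
    """Full limit once the index reaches the trigger, otherwise nothing."""
    return limit if index_value >= trigger else 0.0


def linear_ilw_payout(index_value: float, trigger: float,
                      exhaustion: float, limit: float) -> float:
    """Pro rata payout between the trigger and a hypothetical exhaustion level.

    Pays 0 at or below the trigger, rises linearly with the index and
    reaches the full limit at `exhaustion` (an illustrative assumption).
    """
    if exhaustion <= trigger:
        raise ValueError("exhaustion must exceed trigger")
    fraction = (index_value - trigger) / (exhaustion - trigger)
    return limit * min(max(fraction, 0.0), 1.0)


if __name__ == "__main__":
    # Industry losses in US$ billions; limit in US$ millions (illustrative).
    for industry_loss in (15.0, 20.0, 25.0, 35.0):
        print(industry_loss,
              binary_ilw_payout(industry_loss, 20.0, 10.0),
              linear_ilw_payout(industry_loss, 20.0, 30.0, 10.0))
```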

MARKET SIZE

While the size of the catastrophe bond market is known, it is difficult to estimate the volume of the industry loss warranty and catastrophe derivative

market. The OTC transactions are rarely disclosed, leading to a wide range

of estimates of market size. The only part of the market with readily available

data is that of exchange-traded catastrophe derivatives. The exchanges

report the open interest on each of their products.

While its size is not very big (with no estimates exceeding US$10 billion

in limits), this market is important as a barometer of reinsurance rates and their movements. Exchange-traded products bring price transparency to the

traditionally secretive reinsurance market. The growing activity of ILW

brokers is leading to increased transparency in the OTC markets as well.

While not directly comparable to traditional reinsurance contracts, catastrophe

derivatives and ILWs provide an important reference point in

pricing reinsurance protection.

It is likely that in terms of total limits, the ILW and catastrophe derivative

market is between US$5 and US$10 billion. This number does not include

catastrophe and other insurance derivatives linked to mortality and

longevity; only property and casualty insurance risks are included.

The market has been growing, but the growth has not been steady. Similar to the

retro market (of which some consider this market a part), its size is particularly

prone to fluctuations based on the rate levels in the traditional

reinsurance market. The one part of the market that we can see growing is

that of exchange-traded insurance derivatives. However, exchange-traded

products are currently a relatively small part of the overall marketplace.

KEY INDEXES

A number of indexes have been used in structuring insurance derivatives and ILW transactions. They include indexes tied directly to insurance losses

and those tied to physical parameters of events that affect insurance losses.

The overview below focuses on the indexes providing the most credible

information on the level of insured industry-level property losses due to

natural catastrophes.

Property Claim Services

PCS, a unit of ISO, collects, estimates and reports data on insured losses from catastrophic events in the US, Puerto Rico and the US Virgin Islands.

While every provider of insured catastrophe loss data in the world

has at times been criticised for supposed inaccuracies or delays in reporting,

PCS is generally believed to be the most reliable and accurate.

In the half a century since it was established, the organisation has developed sound

procedures for data collection and loss estimation. It has the ability to collect,

on a confidential basis, data from a very large number of insurance carriers

as well as from residual market vehicles such as joint underwriting associations.

Other data sources are used as well. Insurance coverage limits, coinsurance, deductible amounts and other factors are taken into account by PCS in estimating insured losses. PCS provides estimates for every catastrophe, which it defines as an event that causes US$25 million or more in direct insured property losses and affects a significant number of

policyholders and insurers. Data for both personal and commercial lines of

business is included.

Loss estimates are usually reported within two weeks of the occurrence of

a PCS-designated catastrophe (PCS assigns each such event a serial

number). For events with likely total insured property loss in excess of

US$250 million, PCS conducts re-surveys and reports their results approximately

every 60 days until it believes that the estimate reasonably reflects

insured industry loss.
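The dollar thresholds above can be expressed as a simple classification. This sketch models only the dollar tests. It does not capture PCS's other criterion (a significant number of policyholders and insurers affected), nor PCS's judgement about the likely total loss. The labels are invented for the example.

```python
# Dollar thresholds from the text (US$, direct insured property loss).
CATASTROPHE_THRESHOLD_USD = 25_000_000
RESURVEY_THRESHOLD_USD = 250_000_000
RESURVEY_INTERVAL_DAYS = 60  # approximate spacing of re-survey reports


def pcs_reporting_treatment(estimated_insured_loss_usd: float) -> str:
    """Classify an event by the PCS dollar thresholds only (hypothetical labels)."""
    if estimated_insured_loss_usd > RESURVEY_THRESHOLD_USD:
        return f"designated, re-surveyed about every {RESURVEY_INTERVAL_DAYS} days"
    if estimated_insured_loss_usd >= CATASTROPHE_THRESHOLD_USD:
        return "designated"
    return "not designated"


if __name__ == "__main__":
    for loss in (10e6, 25e6, 120e6, 2.5e9):
        print(f"US${loss:,.0f}: {pcs_reporting_treatment(loss)}")
```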

These larger events are the ones of interest for catastrophe

derivatives and ILWs. Figure 5.3 shows an example of PCS loss

estimates for Hurricane Ike at various time points, in reference to the settlement

prices for two of the exchange-traded catastrophe derivatives that use

PCS-based triggers.

While general catastrophe loss data is available dating back to the establishment

of PCS in 1949, the more detailed data by geographic territory and

insurance business line is available for only the more recent years.

In Table 5.1, opposite, we can see the development of industry-insured

loss estimates for the largest catastrophic events since 2001.

The time between the occurrence of a catastrophic event and the reporting of the final

estimate can vary significantly depending on the event and the complexity of

the data collection and extrapolation. Of the events shown in Table 5.1,

Hurricane Katrina had 10 re-survey estimates issued, with the last one

almost two years after the event occurrence.

However, the changes over the year preceding the reporting of the final estimate were minuscule. For Hurricane Gustav in 2008, the final estimate was issued in less than five months,

and that final number did not change from the first re-survey estimate.

Insured loss estimates for catastrophes that happened before those shown

in Table 5.1 often lacked precision, even though they did not take longer to

obtain. For the 1994 Northridge earthquake in California, the preliminary

estimate increased 80% in two months, and the final estimate was five times the original number.

However, we have to recognise that the methodologies employed by PCS have been changing: current estimation techniques are more reliable than those used years ago, which may have focused disproportionately on the losses actually reported.

Catastrophe loss indexes based on PCS data are the basis for many ILW

and catastrophe derivative transactions, as well as for catastrophe bonds

and other insurance-linked securities. Both single-event and cumulative

catastrophe loss triggers can be based on PCS indexes.

Perils

Incorporated in 2009, PERILS AG was created to provide information on industry-insured losses for catastrophic events in Europe, similar to the way

PCS provides information in the US. The plans call for ultimate expansion

of catastrophe data reporting beyond Europe to other regions outside the

US.

The shareholders of the company are major insurance and reinsurance

companies and a reinsurance intermediary, ensuring that a large segment of

catastrophe loss data will be provided to PERILS. The information is

provided anonymously by insurance companies and includes exposure data

(expressed as sums insured) by CRESTA zone and by country, property

premium data by country, and catastrophic event loss data by CRESTA zone

and by country.

The data is aggregated and extrapolated to the whole insurance

industry based primarily on known premium volumes. Industry

exposure and catastrophe loss data are examined for reasonableness and

tested against information from other sources. The methodology is still

evolving.

In December 2009, PERILS launched an industry loss index service for

European windstorm catastrophic events. The data can be used for industry

loss warranties (ILW) and broader insurance-linked securities (ILS) transactions

involving the use of industry losses as a trigger. Table 5.2 provides a

description of the PERILS indexes for ILS transactions.

ILW reinsurance transactions based on a PERILS catastrophe loss index

were completed shortly after the introduction of the indexes. The scope and

number of the indexes are expected to grow. The data collected by PERILS

will allow the company to create customised indexes for bespoke transactions.

The reporting is done in euros as opposed to US dollars.

Swiss Re and Munich Re indexes

The two largest reinsurance companies, Swiss Re and Munich Re, have been compiling industry loss estimates for catastrophic events for decades. Swiss

Re’s sigma, in particular, has been compiling very reliable loss estimates for

catastrophe events worldwide, including manmade catastrophes. Munich

Re has assembled a very large inventory of catastrophic events in its

NatCatSERVICE loss database. It is similar to Swiss Re’s sigma in its broad

scope but does not include manmade catastrophes. Economic losses from

catastrophic events are often estimated in addition to the insured losses.

ILW transactions have been performed based on both Swiss Re’s sigma and

Munich Re’s NatCatSERVICE.

It is likely that for European windstorm Swiss Re’s and Munich Re’s estimates

are not going to be used for ILS transactions, since PERILS provides a

credible independent alternative. Other perils and other regions around the

world usually do not have such an alternative, and it is likely that Swiss Re

and Munich Re indexes will continue to be used in structuring ILW and

other transactions. This practice may change in the future if PERILS implements

its ambitious expansion plans.

CME hurricane index

This index has been developed specifically to facilitate catastrophe derivative trading. The index, based purely on the physical characteristics of a

hurricane event, aims to provide a measure of insured losses without the use

of any actual loss data such as reported industry losses. While the index has been developed

for North Atlantic hurricanes, in theory the same or a similar approach can

be used for cyclone events elsewhere.
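The sketch below shows how a purely physical index turns hurricane parameters into a single number. It computes CHI using the formula as we understand it from CME's published description: CHI = (V/V0)^3 + (3/2)(R/R0)(V/V0)^2. Here V is the maximum sustained wind speed, R is the radius of hurricane-force winds, V0 = 74 m.p.h. and R0 = 60 miles. The function name is hypothetical. The sketch ignores the settlement rules (which NOAA advisory is used, what counts as landfall, and how multiple landfalls are treated).

```python
V0_MPH = 74.0    # reference speed: tropical storm / hurricane threshold
R0_MILES = 60.0  # reference radius of hurricane-force winds


def cme_hurricane_index(max_sustained_wind_mph: float,
                        hurricane_force_radius_miles: float) -> float:
    """CHI = (V/V0)**3 + 1.5 * (R/R0) * (V/V0)**2 (formula as we understand it)."""
    if max_sustained_wind_mph < V0_MPH:
        raise ValueError("CHI is used only for hurricane-force winds (V >= 74 m.p.h.)")
    v_ratio = max_sustained_wind_mph / V0_MPH
    r_ratio = hurricane_force_radius_miles / R0_MILES
    return v_ratio ** 3 + 1.5 * r_ratio * v_ratio ** 2


if __name__ == "__main__":
    print(cme_hurricane_index(74.0, 60.0))    # 2.5: threshold wind speed, reference radius
    print(cme_hurricane_index(148.0, 60.0))   # 14.0: doubling V scales the terms by 8 and 4
```

The index is continuous in both wind speed and size, unlike the five-step Saffir–Simpson scale.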

Mortality and longevity indexes

A number of indexes tracking population mortality or longevity have beendeveloped for the express purpose of structuring derivative transactions.

These indexes are usually based on general population mortality as opposed

to that of the insured segment of the population. They can be used for

managing the risk of catastrophic mortality jumps affecting insurance

companies, or the longevity risk affecting pension funds, annuity product

providers and governments.

The CME hurricane index (CHI) was originally developed by reinsurance

broker Carvill and is still usually referred to as the Carvill index. CME Group

currently owns all rights to it.

The standard Saffir–Simpson hurricane scale is discrete and provides

only five values (from 1 to 5) based on hurricane sustained speed. Having

only five values can be seen as lacking in precision required for more accurate

estimation of potential losses. In addition, the Saffir–Simpson scale

does not differentiate between hurricanes of different sizes as measured by

the radius of the hurricane. Hurricane size can have a significant effect on

the resultant insurance losses. CHI attempts to improve on the

Saffir–Simpson scale by providing a continuous (as opposed to discrete)

measure of sustained wind speeds and by incorporating the hurricane size

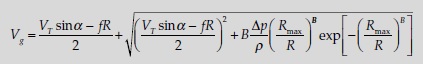

in the calculation. The following formula is used for calculating CHI

V here is the maximum sustained wind speed, while R is the distance that

hurricane-force winds extend from the centre of the hurricane. The denominators

in the ratios are the reference values. V0 is equal to 74 m.p.h., which

is the threshold between a tropical storm and a hurricane as defined by the

Saffir–Simpson scale used by the National Oceanic and Atmospheric

Administration (NOAA) of the US Department of Commerce. The index is

used only for hurricane-force wind speeds, that is, for V equal to or greater

than 74 m.p.h. R0 is equal to 60 miles, which is a somewhat arbitrarily

chosen value intended to represent the radius of an average hurricane in the North Atlantic.

EQECAT is the current official calculation agent of the CHI for CME

Group. In calculating the value of the index used for contract settlement,

EQECAT utilises official data from NOAA. If some of the data is missing,

which would likely involve the radius of hurricane-force winds, EQECAT is

to use its best efforts to estimate the missing values. There are additional

rules governing the determination of which of the public advisories (from

NOAA) is to be used, what constitutes a hurricane landfall, and how

multiple landfalls of the same hurricane are treated.

There is also an index tracking mortality of a specific group of individuals

who have settled their life insurance policies, as opposed to the mortality of

the general population. Life-settlement mortality tracked by such an index

is very different from and not to be confused with mortality of the insured

segment of the population.

This chapter focuses on non-life insurance derivatives and ILWs.

Mortality and longevity indexes and the insurance derivative products

based on them are described in detail in the chapters dealing with securitised

life insurance risk and the hedging of longevity risk.

MODELLING INDUSTRY LOSSES

Modelling losses for the whole industry is performed using the tools that are used for modelling losses for a portfolio of risks. Industry loss estimates are

significantly more stable than those of underwriting portfolios of individual

insurance companies. Data such as premium volume provides additional

information that assists in making better predictions.

In addition, using probabilistic estimates of industry losses is a natural way of comparing

different modelling tools. An outlier would be quickly noticed and need to

be explained. Expected annual losses for peak hazards produced by

different modelling tools do not significantly diverge. The overall probability

distributions, however, can differ considerably.

As an example, the following table shows estimated probabilities of insurance

industry losses, as would be calculated by PCS, from a single catastrophic

event exceeding a certain level that is used as a trigger for catastrophe derivatives and industry loss warranties. The probabilities do not correspond directly to the results of any of the standard catastrophe models. Instead of utilising the entire historical event catalogue, they are based on an assumption of significantly heightened hurricane activity and warm sea surface temperatures. This explains the higher than usually assumed probabilities of exceedance.
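Exceedance probabilities of this kind are usually derived from a model's stochastic event catalogue. This sketch assumes a common simplification: events occur as independent Poisson processes. Under that assumption, the probability that at least one event in a year reaches a trigger is 1 − exp(−λ), where λ is the total annual rate of events with losses at or above the trigger. The catalogue below is invented for illustration. It does not reproduce the probabilities discussed above or the output of any vendor model.

```python
import math
from typing import Iterable, Tuple

# Hypothetical event catalogue: (annual rate, industry loss in US$ billions).
# Invented numbers for illustration; not taken from any catastrophe model.
CATALOGUE = [(0.10, 15.0), (0.05, 25.0), (0.02, 45.0), (0.01, 80.0)]


def exceedance_probability(catalogue: Iterable[Tuple[float, float]],
                           trigger: float) -> float:
    """Annual probability that at least one event causes a loss >= trigger.

    Assumes each event occurs as an independent Poisson process, so the
    rates of all qualifying events simply add.
    """
    qualifying_rate = sum(rate for rate, loss in catalogue if loss >= trigger)
    return 1.0 - math.exp(-qualifying_rate)


if __name__ == "__main__":
    for trigger in (20.0, 30.0, 40.0, 50.0):
        print(f"US${trigger:.0f}bn: {exceedance_probability(CATALOGUE, trigger):.2%}")
```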

THE ILW MARKET

The ILW market is very similar to the traditional reinsurance market in that it is facilitated, almost exclusively, by reinsurance brokers. The three largest

reinsurance brokers, Aon Re, Guy Carpenter and Willis Re, account for

almost all of the market volume. There are several small brokers that participate

in the ILW market, but their share is small. Investment banks, despite

their role in ILS markets in general, have limited involvement in ILWs.

The vast majority of ILWs provide protection against standard risks of

wind damage and earthquakes in the US, wind in Europe and earthquakes

in Japan. Coverage of all natural perils in these territories is also

common. The US territory can be split into several pieces, of which Florida

has the most significant exposure to hurricane risk. In addition, second- and

third-event contracts are often quoted.

For these perils, in the US the standard

index is PCS losses, with trigger points ranging from as low as US$5

billion in industry losses to as high as US$120 billion or even greater to

provide protection against truly catastrophic losses.

Figure 5.1, opposite, illustrates indicative pricing for 12-month ILWs

covering the wind and flood risk in all of the US. The prices, expressed as a

percentage of the limit, are shown for first-event contracts at four trigger

levels: US$20 billion, US$30 billion, US$40 billion and US$50 billion. The

trigger levels are chosen to correspond to those used later in the chapter in

the illustration of price levels for the IFEX contracts covering substantially

the same catastrophe events.

The prices can be seen to fluctuate dramatically depending on the market

conditions. The highest levels were achieved following the Katrina–Rita–

Wilma hurricane season of 2005. Another spike followed the 2008 hurricane

losses combined with the capital depletion due to the financial crisis. The

expectations of even higher rates immediately before the hurricane season

of 2009, however, did not materialise.

Structuring an ILW

Industry loss warranties have become largely standardised in terms of their typical provisions and legal documentation. A common ILW agreement will

be structured to provide protection in case of catastrophic losses due to a

natural catastrophe such as a hurricane or an earthquake.

The first step will be deciding on the appropriate index, which in the US

can be a PCS index. Once the index is chosen, the attachment point has to be

determined, as well as the protection limit.

As the value of losses from a

catastrophic event is not immediately known and an organisation such as

PCS will need time to provide a reliable estimate, a reporting period needs

to be specified to allow for loss development. This period can be, for

example, 24 months from the date of the loss or 18 months from the end of the

risk term.
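The two example conventions for the reporting period can be computed directly. This sketch uses only the standard library. The convention names are hypothetical labels, and the 24- and 18-month lengths are the examples given in the text. The month arithmetic, which clamps to the last day of shorter months, is also an assumption, since actual contracts define how dates are counted.

```python
import calendar
from datetime import date


def add_months(d: date, months: int) -> date:
    """Shift a date by whole months, clamping to the last day of shorter months."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(d.day, last_day))


def reporting_deadline(loss_date: date, risk_end: date, convention: str) -> date:
    """End of the loss development period under two example conventions."""
    if convention == "from_loss":        # e.g. 24 months from the date of loss
        return add_months(loss_date, 24)
    if convention == "from_risk_end":    # e.g. 18 months from the end of the risk term
        return add_months(risk_end, 18)
    raise ValueError(f"unknown convention: {convention!r}")


if __name__ == "__main__":
    loss_date, risk_end = date(2010, 9, 15), date(2011, 5, 31)  # hypothetical dates
    print(reporting_deadline(loss_date, risk_end, "from_loss"))      # 2012-09-15
    print(reporting_deadline(loss_date, risk_end, "from_risk_end"))  # 2012-11-30
```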

The contract risk term is generally 12 months or shorter. Some

ILWs provide protection only during the hurricane season. For earthquake

protection, the 12-month term is standard. Multi-year contracts are rare.

As an example of the legal language in a contract providing protection

against catastrophic losses due to an earthquake, the contract might “indemnify

the Reinsured for all losses, arising from earthquake and fire following

such earthquake, in respect of all policies and/or contracts of insurance

and/or reinsurance, including workers’ compensation business written or

assumed by the Reinsured, occurring within the territorial scope hereon.

This Reinsurance is to pay in the event of an Insured Market Loss for property

business arising out of the same event being equal to or greater than US$20 billion (a ‘Qualifying Event’). For purposes of determining the

Insured Market Loss, the parties hereto shall rely on the figures published

by the Property Claim Services (PCS) unit of the Insurance Services Office.”

The US$20 billion is specified as an example of the trigger level.

The limits can be specified in the manner typical of an excess-of-loss reinsurance

contract, with the possible contract language stipulating that the

reinsured will be paid up to a certain US dollar amount for “ultimate net loss

each and every loss and/or series thereof arising out of a Qualifying Event

in excess of” an agreed-upon “ultimate net loss each and every loss and/or

series thereof arising out of a Qualifying Event”.

A reinstatement provision

usually would not be included, but there are other ways to ensure continuing

protection after a loss event, including purchasing second- or

multiple-event coverage, which can also be in the form of an ILW.

While the reinsurance agreement requires that both conditions be satisfied

– that is, only actual losses be reimbursed and only when the industry

losses exceed a predetermined threshold – the agreements tend to be structured

so that only the latter condition determines the payout.

The attachment point for the UNL is generally chosen at a very low level, so that whenever the industry loss trigger is exceeded, the reinsured is almost certain to have suffered losses above that attachment point. There is, however, a chance of the contract being triggered while the reinsured’s covered UNL is below the full reinsurance limit; since only actual losses are reimbursed, the payout is then less than the limit.
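The interaction of the two triggers can be sketched as follows. The function name and figures are hypothetical. Following the contract language quoted above, the sketch assumes that a payment is made only when the industry index reaches the trigger. The recovery is then the reinsured's UNL in excess of its retention, capped at the limit.

```python
def double_trigger_ilw_recovery(industry_loss: float, industry_trigger: float,
                                ultimate_net_loss: float, unl_retention: float,
                                limit: float) -> float:
    """Recovery under a double-trigger (reinsurance-form) ILW.

    Nothing is paid unless the industry index reaches the trigger; if it does,
    the reinsured recovers its UNL in excess of the retention, capped at the limit.
    """
    if industry_loss < industry_trigger:
        return 0.0  # index trigger not met, regardless of the reinsured's own loss
    return min(max(ultimate_net_loss - unl_retention, 0.0), limit)


if __name__ == "__main__":
    # Industry figures in US$ billions; reinsured's figures in US$ millions (hypothetical).
    print(double_trigger_ilw_recovery(25.0, 20.0, 30.0, 1.0, 10.0))  # 10.0: full limit
    print(double_trigger_ilw_recovery(25.0, 20.0, 6.0, 1.0, 10.0))   # 5.0: triggered, UNL below limit
    print(double_trigger_ilw_recovery(18.0, 20.0, 30.0, 1.0, 10.0))  # 0.0: index below trigger
```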

Arguably the most important element of an ILW contract is the price paid

for the protection provided. The price would typically be expressed as a rate

on line (RoL), that is, the ratio of the protection cost (premium) to the protection

limit provided. The payment is often made upfront by the buyer of the

protection.
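Rate on line is a simple ratio. For a binary ILW, the expected loss as a fraction of the limit is roughly the probability of the trigger being hit (ignoring discounting). Comparing RoL with a modelled trigger probability therefore gives a rough price multiple. The multiple is a common market yardstick assumed here rather than a measure defined in this chapter, and the figures below are hypothetical.

```python
def rate_on_line(premium: float, limit: float) -> float:
    """Premium as a fraction of the protection limit."""
    if limit <= 0:
        raise ValueError("limit must be positive")
    return premium / limit


def price_multiple(premium: float, limit: float, expected_loss_fraction: float) -> float:
    """RoL divided by modelled expected loss (as a fraction of limit).

    For a binary ILW, expected_loss_fraction is approximately the modelled
    probability that the index trigger is reached (discounting ignored).
    """
    return rate_on_line(premium, limit) / expected_loss_fraction


if __name__ == "__main__":
    premium, limit = 1.2e6, 10e6          # hypothetical US$ figures
    trigger_probability = 0.05            # hypothetical model output
    print(f"RoL: {rate_on_line(premium, limit):.1%}")
    print(f"Multiple: {price_multiple(premium, limit, trigger_probability):.2f}")
```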

An important issue in structuring an ILW is management of credit risk.

This topic is covered later in the chapter. Collateralisation, either full or

partial, might be required to assure payment. The need for collateralisation

is more important when the protection is provided by investors as opposed

to a rated reinsurance company.

ISDA US WIND SWAP CONFIRMATION TEMPLATE

In 2009, the International Swaps and Derivatives Association (ISDA) published a swap confirmation template to facilitate and standardise the

documentation of natural-catastrophe swaps referencing US wind events.

Prior to that, several templates existed in the marketplace. The ISDA

template is based on the one originally developed by Swiss Re. The template

uses PCS estimates for insurance industry loss data for catastrophic wind

events affecting the US.

The covered territory is defined as all of the US,

including the District of Columbia, Puerto Rico and US Virgin Islands. The

option of choosing a subset of this territory also exists. It allows the choice

of three types of covered event: USA Wind Event 1, USA Wind Event 2 and

USA Wind Event 3. The first type is the broadest and includes all wind

events that would be included in the PCS Loss Report.

The second specifically

excludes named tropical storms, typhoons and hurricanes, while the

third includes only named tropical storms, typhoons and hurricanes. As in

all of the swap confirmations used in the past for US wind, flood following

covered perils is included in the damage calculation. The template clarifies

the treatment of workers’ compensation losses, and whether loss-adjustment

expenses related to such losses are included.

It allows for both binary

and non-binary (linear) payments in the event of a covered loss.
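The choice among the three covered event types can be sketched as a filter on PCS-reported wind events. The enum and flag names are hypothetical. The sketch assumes that all three types draw only on events in the PCS Loss Report. In practice, the template's own definitions govern, including those for the covered territory.

```python
from enum import Enum


class CoveredEventType(Enum):
    """Hypothetical encoding of the template's three covered event types."""
    USA_WIND_EVENT_1 = 1  # all wind events in the PCS Loss Report
    USA_WIND_EVENT_2 = 2  # excludes named tropical storms, typhoons and hurricanes
    USA_WIND_EVENT_3 = 3  # only named tropical storms, typhoons and hurricanes


def is_covered(event_type: CoveredEventType, in_pcs_wind_report: bool,
               is_named_storm: bool) -> bool:
    """Return True if a wind event falls within the chosen covered event type.

    Assumes every covered event must appear in the PCS Loss Report; territory
    and flood-following rules are not modelled.
    """
    if not in_pcs_wind_report:
        return False
    if event_type is CoveredEventType.USA_WIND_EVENT_1:
        return True
    if event_type is CoveredEventType.USA_WIND_EVENT_2:
        return not is_named_storm
    return is_named_storm  # USA_WIND_EVENT_3


if __name__ == "__main__":
    for event_type in CoveredEventType:
        print(event_type.name,
              "hurricane:", is_covered(event_type, True, True),
              "non-named windstorm:", is_covered(event_type, True, False))
```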

The ISDA template specifically states that the transaction is not a contract

of insurance and that there is no insurable loss requirement. The structure is

that of a pure financial derivative without any insurance component.

While the template brings legal documentation standardisation to these

OTC transactions, it allows a significant degree of customisation to minimise

the basis risk of the hedging party; this degree of customisation is not

possible when using only exchange-traded instruments.

IFEX CATASTROPHE DERIVATIVES

Of the exchange-traded catastrophe derivatives, IFEX event-linked futures (ELF) are one of the two most common, the other being CME catastrophe

derivatives. IFEX is the Insurance Futures Exchange, which developed

(together with Deutsche Bank) event-linked futures. IFEX event-linked

futures are traded on the Chicago Climate Futures Exchange (CCFE), a relatively

new exchange focused on environmental financial instruments.

CCFE is owned by Climate Exchange PLC, a UK publicly traded company. The

founder of CCFE, Richard L. Sandor, played a key role in the introduction

of the first catastrophe derivative products in the early 1990s. Even though

the products were well designed, at the time the insurance industry was not

ready for such a radical innovation as trading insurance risk.

In addition to the need for education, the industry then did not have proper tools to quantify

catastrophe risk or to estimate the level of basis risk created by the use

of index-linked products as opposed to traditional reinsurance.

The CCFE IFEX contracts have been designed to replicate, as far as

possible, the better-known and accepted ILW contracts.

The two primary differences between a traditional ILW and the corresponding IFEX contract

are, first, that IFEX event-linked futures are financial derivatives and not

reinsurance, and, second, that IFEX contracts provide an effective way to

minimise if not eliminate the counterparty credit risk present in many ILW

transactions. The terms “IFEX contract” and “ELF contract” are often used

interchangeably.